Policy-as-Code - Your Path to Secure and Compliant Cloud Infrastructure

14.11.2023 | 12 min read

In the era of advanced security tools and modern infrastructure, it's easy to get caught up in the excitement of sophisticated solutions. Yet when you set out to build your infrastructure, understanding the fundamental principles of security is the first step toward a robust foundation that minimizes the need for extensive testing and remediation.

Foundational Principles of Infrastructure Security

Here are the core principles that serve as pillars of infrastructure security:

Principle of Least Privilege (PoLP)

PoLP is a fundamental security concept: users get only the minimum level of access or permissions required for their specific job functions. Closely tied to access control, it encourages a precise allocation of privileges. For instance, avoid granting wildcard ('*') permissions to every team member, and avoid running processes as the root user inside Docker containers.
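To make the '*' example concrete, here is a minimal Python sketch that scans an AWS IAM-style policy document (the `Effect`/`Action`/`Resource` layout) for wildcard grants. The function name and message format are our own. A wildcard resource is sometimes legitimate (for example, for list operations), so treat the findings as prompts for review rather than hard failures.

```python
# Minimal sketch: flag Allow statements in an IAM-style JSON policy that use
# wildcards. The function name and the message format are illustrative only.


def find_wildcard_grants(policy: dict) -> list:
    """Return one message per wildcard found in an Allow statement."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue  # wildcards in Deny statements restrict access, which is fine
        actions = stmt.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = stmt.get("Resource", [])
        resources = [resources] if isinstance(resources, str) else resources
        # "*" grants every action; "service:*" grants every action of one service
        broad = [a for a in actions if a == "*" or a.endswith(":*")]
        if broad:
            findings.append(f"statement {index}: broad actions {broad}")
        if "*" in resources:
            findings.append(f"statement {index}: applies to all resources")
    return findings


if __name__ == "__main__":
    team_policy = {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
    }
    for finding in find_wildcard_grants(team_policy):
        print(finding)
```

Run as a pre-merge check, a non-empty result is a signal to narrow the statement to the actions the team actually needs.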

Shared Responsibility Model

The shared responsibility model defines the boundaries between a cloud provider and its users (administrators). By clarifying each party's responsibilities, it eases the operational burden on customers. The cloud provider manages and secures everything from the host operating system and virtualization layer down to the physical facilities, while customers are responsible for guest operating systems, including updates and security patches.

Data Encryption (In-Transit and at Rest)

Protecting data throughout its lifecycle is crucial. Using Transport Layer Security (TLS) for data in transit and encryption for data at rest safeguards it from unauthorized access in case of leaks or misconfigured access controls.

"Do Not Make Public If It May Stay Private"

This principle advocates for a default stance of keeping data private and selectively making it public only when necessary. For example, AWS S3 buckets and Kubernetes clusters should initially be configured as private, with public access granted only for specific use cases.
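For S3, "private by default" can be checked mechanically. The Python sketch below assumes you already have a bucket's Block Public Access configuration as a dictionary (how you fetch it, via an API call or a Terraform plan, is out of scope). The four keys are the S3 `PublicAccessBlockConfiguration` settings. The function name is our own.

```python
# Sketch: check that a bucket's S3 Block Public Access configuration keeps it private.
# The four keys are the S3 PublicAccessBlockConfiguration settings; how the dict is
# obtained (API call, Terraform plan, etc.) is out of scope here.
from typing import List, Optional

REQUIRED_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def missing_public_access_blocks(config: Optional[dict]) -> List[str]:
    """Return the Block Public Access flags that are not explicitly enabled."""
    config = config or {}  # no configuration at all means nothing is blocked
    return [flag for flag in REQUIRED_FLAGS if config.get(flag) is not True]


if __name__ == "__main__":
    bucket_config = {"BlockPublicAcls": True, "IgnorePublicAcls": True}
    missing = missing_public_access_blocks(bucket_config)
    print("private" if not missing else f"not locked down, missing: {missing}")
```

Requiring each flag to be explicitly `True` mirrors the principle: anything not deliberately locked down is treated as potentially public.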

Keep the Secrets Secret

To maintain security, confidential data must never be stored in a public or easily accessible place. Storing secrets, such as credentials or other sensitive information, in plain text, even within private repositories, is a practice to avoid at all costs.
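A small check like the following Python sketch can catch the most obvious case before a commit lands: AWS access key IDs, which start with `AKIA` (long-term keys) or `ASIA` (temporary keys) followed by 16 uppercase letters or digits. This is one pattern among the many that dedicated secret scanners look for, and the function name is our own.

```python
import re

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary, STS) followed by
# 16 uppercase letters or digits. A real scanner checks many more patterns.
AWS_ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_aws_key_ids(text: str) -> list:
    """Return (line_number, match) pairs for strings that look like AWS key IDs."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in AWS_ACCESS_KEY_ID.finditer(line):
            hits.append((line_no, match.group()))
    return hits


if __name__ == "__main__":
    # AWS's documented example key ID, not a real credential
    config_file = 'region = "eu-west-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
    for line_no, key_id in find_aws_key_ids(config_file):
        print(f"line {line_no}: possible AWS access key ID {key_id}")
```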

By grasping these foundational principles, you lay the groundwork for a secure infrastructure that not only minimizes vulnerabilities but also simplifies the management of security.

An eye-opening article titled "Infrastructure-as-code templates are the source of many cloud infrastructure weaknesses" brings alarming statistics from 2020 to light:

- Over 10% of S3 storage buckets defined in templates were publicly exposed.

- 43% didn't have encryption enabled.

- 45% had no logging enabled.

- 22% of Terraform templates and 9% of Kubernetes YAML configuration files exhibited vulnerabilities.

But there's more to the story. These statistics underscore the pressing need to fix configuration issues in cloud infrastructure. That's where the tools described in our previous article, "Terraform Security Tools — Spend Minutes, Save Millions", come into play. These tools examine your Terraform code and identify security vulnerabilities, malformed syntax, and more. As the title suggests, the potential savings are substantial, since exposed infrastructure can have significant financial consequences.

But why stop at Terraform? What about Kubernetes manifests? Docker Images? Gateway configurations? To comprehensively address these concerns, we can introduce Policy-as-Code frameworks. These tools enable us to define, manage, and enforce policies using code, extending our reach to encompass a broader spectrum of infrastructure and application configurations.

Cloud Policies of "The Big Three"

Before we start exploring tools that can help us maintain a policy-aware infrastructure, let's examine what we can configure within the 'big three' cloud providers. Each of them offers a service related to what is commonly known as 'policy.' In AWS, we have Organization Policies; in Azure, we have Azure Policies, and in GCP, we have something similar through the Organization Policy Service.

AWS Organization Policies

First, let's focus on AWS Organizations Policies. These policies, offered by Amazon Web Services (AWS), play a pivotal role in simplifying governance, resource management, and security across multiple AWS accounts. In this chapter, we'll delve into how AWS Organizations Policies help organizations prevent resource sprawl, optimize costs, and strengthen security.

Additionally, we'll explore the implementation of 'AI services opt-out policies' and 'Backup Policies' to provide a comprehensive view of governance within AWS Organizations.

- Service Control Policies (SCPs) let you manage permissions across the organization. They give you central control over the maximum available permissions for all accounts in your organization: an SCP can allow or deny specific actions for the accounts it applies to, but it never grants permissions by itself.

- AI services opt-out policies let us opt out of having our content stored and used by AWS AI services (Amazon Rekognition, Amazon CodeWhisperer, etc.) to improve those services.

- Backup Policies let you define backup plans centrally in AWS Organizations and attach them, as JSON documents, to the root, to organizational units (OUs), or to individual accounts. You can set the backup frequency, the backup window, and other options. We all know there are two groups of people: “those who have backups and those who will” 🙂. So it is worth setting up.

AWS Organizations Policies offer a robust framework for governing AWS accounts, resources, and permissions across your organization. By enforcing standardized resource tagging, optimizing costs through SCPs, enhancing security with uniform policies, implementing "AI services opt-out policies" for data privacy, and establishing "Backup Policies" for data protection, you can achieve better cloud governance and operational efficiency.

Azure Policies

Now, let's look at how Azure Policies, a powerful feature of Microsoft Azure, can help you prevent unnecessary resource sprawl, reduce costs, and bolster your cloud security. Azure Policies are a set of rules and guidelines that allow you to enforce specific requirements, standards, and best practices within your Azure cloud environment. They are expressed as code, as JSON policy definitions, and related definitions can be grouped into Azure Policy initiatives.

Incorporating Azure Policies into your cloud governance strategy can be a game-changer. By codifying your policies and automating their enforcement, you not only prevent unnecessary resource creation but also optimize costs and enhance security.

The main features of Azure Policies are:

Customizable Rules

Azure Policies enable you to create highly customizable rules tailored to your organization's specific needs. Define policies that match your compliance standards and operational requirements.

Prevention of Resource Misconfiguration

Ensure that resources are provisioned according to your organization's standards, eliminating the risk of misconfigurations that can lead to security vulnerabilities.

Automated Compliance Checks

Azure Policies automatically assess your resources for compliance with defined rules. This continuous monitoring keeps your environment in check and helps you maintain compliance.

Integration with Azure Initiatives

Leverage Azure Policy initiatives to group related policies together. This simplifies the management of complex compliance requirements and streamlines policy enforcement.

Scalable Enforcement

Whether you have a handful of resources or a vast cloud infrastructure, Azure Policies scale effortlessly to enforce rules across your entire Azure environment.

Comprehensive Reporting

Gain insights into policy compliance through detailed reporting. Identify areas of non-compliance and take proactive measures to address them.

Real-time Validation

Policies are evaluated in real time, ensuring that resources adhere to policy requirements from the moment they are created or modified.

Enforcement Across Resource Types

Azure Policies can be applied to a wide range of Azure resources, including virtual machines, databases, storage accounts, and more.

Incorporating these features into your cloud governance framework equips you with the tools needed to maintain control, security, and compliance in your Azure environment. Azure Policies not only help you meet regulatory requirements but also enhance operational efficiency, making them an essential component of your cloud strategy.


Google Organization Policies

As we continue our exploration of "Policy as Code" in the cloud, let's shift our focus to Google Organization Policies. These policies, offered by Google Cloud, are instrumental in streamlining governance, resource management, and security across Google Cloud environments. In this chapter, we'll delve into how Google Organization Policies empower organizations to prevent resource sprawl, optimize costs, and enhance security.

- Google Organization Policies allow you to centrally and programmatically control your organization’s cloud resources by configuring constraints across your entire resource hierarchy.

- Constraints are a type of restriction that apply to one or more Google Cloud services, and determine how they can be configured. For example, you can use constraints to limit resource sharing based on domain, limit the usage of Identity and Access Management service accounts, or restrict the physical location of newly created resources.

- Organization policies are inherited by the child resources of the resource where they are set, such as folders or projects. You can also override or disable organization policies at lower levels of the hierarchy, depending on the constraint type and your permissions.

- Organization policies help you enforce compliance and conformance with your organization’s policies and regulations, as well as improve security and reduce costs by preventing resource sprawl and misuse. You can also monitor and audit the organization policies applied to your resources using Cloud Logging and Cloud Audit Logs.
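The inheritance rule described above can be modeled in a few lines. This simplified Python sketch covers boolean constraints only. The closest resource in the hierarchy that sets the constraint wins; otherwise the parent's setting applies, and otherwise a default. List constraints, which can merge with their parent's values, are deliberately left out, and the organization, folder, and project names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Resource:
    """A node in the resource hierarchy: organization, folder or project."""
    name: str
    parent: Optional["Resource"] = None
    # constraint -> enforced? A missing entry means "inherit from the parent"
    policies: Dict[str, bool] = field(default_factory=dict)


def is_enforced(resource: Resource, constraint: str, default: bool = False) -> bool:
    """Walk up the hierarchy; the closest explicit setting wins."""
    node = resource
    while node is not None:
        if constraint in node.policies:
            return node.policies[constraint]
        node = node.parent
    return default


if __name__ == "__main__":
    KEY_CREATION = "constraints/iam.disableServiceAccountKeyCreation"
    org = Resource("organizations/123", policies={KEY_CREATION: True})
    prod = Resource("folders/prod", parent=org)
    sandbox = Resource("folders/sandbox", parent=org, policies={KEY_CREATION: False})
    print(is_enforced(Resource("projects/api", parent=prod), KEY_CREATION))
    print(is_enforced(Resource("projects/experiments", parent=sandbox), KEY_CREATION))
```

Here the organization forbids service account key creation everywhere, while the sandbox folder overrides it for the projects beneath it.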

Examples of use

The cloud policy services described above can be used in many settings within an organization. Let’s look at some real-life scenarios where we might use them.

Preventing Resource Sprawl

One of the primary challenges in multi-account AWS environments is resource proliferation. Teams might create resources without adhering to best practices or cost controls. AWS Organizations Policies provide a robust framework to address this issue.

Scenario: enforcing tagging standards

Suppose your organization requires all resources to be tagged with specific metadata for cost allocation and tracking. With AWS Organizations tag policies, you can standardize these tags across all AWS accounts, and an SCP can deny requests that create resources without a required tag.
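Whatever the enforcement setup, it helps to be able to report which resources are missing required tags. Here is a minimal Python sketch. The resource dictionaries (`Id`, `Tags`) and the tag keys `CostCenter` and `Owner` are hypothetical, and the comparison is case-sensitive, as AWS tag keys are.

```python
# Hypothetical set of tags every resource must carry
REQUIRED_TAGS = frozenset({"CostCenter", "Owner"})


def missing_required_tags(resources, required=REQUIRED_TAGS) -> dict:
    """Map each resource ID to the sorted list of required tag keys it lacks."""
    report = {}
    for resource in resources:
        tags = resource.get("Tags", {})
        missing = sorted(set(required) - set(tags))  # tag keys are case-sensitive
        if missing:
            report[resource["Id"]] = missing
    return report


if __name__ == "__main__":
    inventory = [
        {"Id": "i-0abc", "Tags": {"CostCenter": "42", "Owner": "team-a"}},
        {"Id": "i-0def", "Tags": {"Owner": "team-b"}},
        {"Id": "example-bucket"},
    ]
    for resource_id, missing in missing_required_tags(inventory).items():
        print(resource_id, "is missing", ", ".join(missing))
```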

Cost Optimization Through Service Control Policies (SCPs)

AWS Organizations introduces Service Control Policies (SCPs) that allow you to set fine-grained permissions and restrictions across accounts. SCPs can help you optimize costs by controlling access to AWS services and features.

Scenario: restricting access to costly services

You can create an SCP that restricts access to expensive AWS services like Amazon Redshift or Amazon RDS to specific accounts or teams. This prevents accidental resource launches incurring unexpected costs.
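Here is a hedged sketch of such an SCP, generated from Python so that the action list can go through code review. It denies a few launch actions for Redshift and RDS unless the caller's role ARN matches an exemption pattern. The pattern `arn:aws:iam::*:role/platform-team-*` is a hypothetical naming scheme. You would attach the resulting document to the OUs or accounts that should not launch these services.

```python
import json

# Sketch of an SCP that denies costly launch actions to everyone except principals
# whose ARN matches an exemption pattern. The role naming scheme is hypothetical.


def build_costly_services_scp(denied_actions, exempt_principal_arn_pattern):
    """Return an SCP document (as a dict) denying the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCostlyServices",
                "Effect": "Deny",
                "Action": list(denied_actions),
                "Resource": "*",
                # The deny applies unless the caller's ARN matches the exemption pattern
                "Condition": {
                    "ArnNotLike": {"aws:PrincipalARN": exempt_principal_arn_pattern}
                },
            }
        ],
    }


if __name__ == "__main__":
    scp = build_costly_services_scp(
        ["redshift:CreateCluster", "rds:CreateDBInstance", "rds:CreateDBCluster"],
        "arn:aws:iam::*:role/platform-team-*",  # hypothetical role naming scheme
    )
    print(json.dumps(scp, indent=2))
```

Using a Deny with a condition, rather than trying to Allow only certain teams, keeps the SCP composable with the default allow-all SCP.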

Scenario: Virtual Machine Shutdown Policy

In non-production environments, virtual machines (VMs) are often left running when nobody uses them, which adds unnecessary costs. Azure Policy cannot stop a VM by itself, but it can make sure every VM carries a tag, such as AutoShutdown=true, that a scheduled shutdown job (for example, an automation runbook) uses to stop tagged VMs during non-business hours or on weekends. By automating this process, you can significantly reduce your Azure bill without manual intervention.

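```json
{
  "if": {
    "allOf": [
      {
        "field": "type",
        "equals": "Microsoft.Compute/virtualMachines"
      },
      {
        "field": "tags['AutoShutdown']",
        "notEquals": "true"
      }
    ]
  },
  "then": {
    "effect": "modify",
    "details": {
      "roleDefinitionIds": [
        "/providers/microsoft.authorization/roleDefinitions/b24988ac-6180-42a0-ab88-20f7382dd24c"
      ],
      "operations": [
        {
          "operation": "addOrReplace",
          "field": "tags['AutoShutdown']",
          "value": "true"
        }
      ]
    }
  }
}
```

The modify effect expects its changes as an operations list and requires roleDefinitionIds, here the built-in Contributor role, which the policy assignment's managed identity uses to update the tag. New and updated VMs are tagged automatically; existing VMs are tagged through a remediation task.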
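To see how the `if` block decides whether a VM is affected, here is a simplified Python evaluator for a small subset of the policy rule language (`allOf`, `anyOf`, `not`, `equals`, `notEquals`). It is a model for illustration, not Azure's engine. Field values are looked up in a flat dictionary keyed by the field expression, a missing field is treated as `None`, and string comparisons ignore case.

```python
# Simplified model of Azure Policy condition evaluation (illustration only).

VM_SHUTDOWN_RULE_IF = {
    "allOf": [
        {"field": "type", "equals": "Microsoft.Compute/virtualMachines"},
        {"field": "tags['AutoShutdown']", "notEquals": "true"},
    ]
}


def _same(left, right) -> bool:
    # Treat string comparisons as case-insensitive in this model
    if isinstance(left, str) and isinstance(right, str):
        return left.lower() == right.lower()
    return left == right


def evaluate(condition: dict, resource: dict) -> bool:
    """Return True if the resource matches the condition (so the effect applies)."""
    if "allOf" in condition:
        return all(evaluate(c, resource) for c in condition["allOf"])
    if "anyOf" in condition:
        return any(evaluate(c, resource) for c in condition["anyOf"])
    if "not" in condition:
        return not evaluate(condition["not"], resource)
    value = resource.get(condition["field"])  # missing field -> None
    if "equals" in condition:
        return _same(value, condition["equals"])
    if "notEquals" in condition:
        return not _same(value, condition["notEquals"])
    raise ValueError(f"unsupported condition: {condition}")


if __name__ == "__main__":
    untagged_vm = {"type": "Microsoft.Compute/virtualMachines"}
    tagged_vm = {"type": "Microsoft.Compute/virtualMachines", "tags['AutoShutdown']": "true"}
    print(evaluate(VM_SHUTDOWN_RULE_IF, untagged_vm))  # True: modify would add the tag
    print(evaluate(VM_SHUTDOWN_RULE_IF, tagged_vm))    # False: already compliant
```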

Conclusion

In this comprehensive exploration of cloud governance through Organization Policies in AWS, Azure, and Google Cloud, we've uncovered how these cloud giants empower organizations to take command of their resources, enhance security, and optimize costs. Let's recap the key takeaways from our journey.

Amazon Web Services (AWS) offers a versatile array of Organization Policies that enable organizations to:

- Prevent Resource Sprawl: Enforce tagging standards, ensuring resources are consistently labeled for cost allocation and tracking.

- Cost Optimization: Utilize Service Control Policies (SCPs) to control access to costly services, preventing unexpected expenses.

- Security Reinforcement: Implement security best practices, such as multi-factor authentication (MFA) requirements and access controls, and extend to "AI services opt-out policies" and "Backup Policies" for data protection.

Microsoft Azure equips organizations with the following capabilities through Azure Policies:

- Resource Governance: Enforce resource tagging standards to streamline resource management and cost allocation.

- Cost Control: Optimize cloud spend by restricting access to expensive services, ensuring budget-friendly cloud operations.

- Enhanced Security: Implement consistent security policies and enforce compliance across your Azure environment.

Google Cloud Organization Policies empower organizations to:

- Centralized Governance: Centrally manage and enforce policies across Google Cloud resources and projects.

- Resource Control: Prevent resource sprawl by enforcing labeling standards and restricting non-compliant configurations.

- Access Control: Restrict how IAM can be used, for example by limiting resource sharing to specific domains or limiting the use of service accounts, to enhance security.

Across AWS, Azure, and Google Cloud, Organization Policies have emerged as indispensable tools for cloud governance, offering a unified approach to:

- Centralization - apply policies consistently across cloud environments.

- Customization - tailor policies to meet unique organizational requirements.

- Compliance - ensure adherence to regulatory standards and industry best practices.

- Cost optimization - streamline resource allocation and access to minimize cloud spend.

- Security - strengthen security measures and data protection.

By embracing these policies, organizations can unlock the true potential of the cloud, achieving operational efficiency, cost savings, and robust security in an increasingly complex cloud landscape.

As organizations continue their cloud journeys, mastering the art of "Policy as Code" will be pivotal to successful cloud governance. Stay tuned for more insights and best practices as we navigate the ever-evolving world of cloud management.

Cloud Policy enforcement tools

Now, let's take our exploration to the next level by diving into the world of cloud policy enforcement tools. These tools, exemplified by Polaris and Open Policy Agent (OPA), empower organizations to transform policies into actionable and automated controls within their cloud infrastructure.

As we delve into this chapter, we'll discover how these tools work in tandem with cloud providers' native policies, offering enhanced flexibility, customization, and real-time policy enforcement. Whether you're looking to validate Kubernetes configurations, ensure compliance across multi-cloud environments, or extend your policy enforcement to the application level, these tools have you covered.

Let's embark on this journey to understand how Polaris and Open Policy Agent can help you supercharge your cloud governance efforts, providing you with the means to turn policies into reality and ensure that your cloud environment aligns with your organization's standards and security practices.

Polaris

The first application we would like to check is Polaris by Fairwinds. As its documentation puts it:

Polaris is an open source policy engine for Kubernetes that validates and remediates resource configuration.


It includes 30+ built-in configuration policies, as well as the ability to build custom policies with JSON Schema. When run on the command line or as a mutating webhook, Polaris can automatically remediate issues based on policy criteria.

So, basically, we don't need to write any dedicated policies or configure this service. After installing it, for example, via Helm, we can generate a report that covers our Kubernetes services. I ran it on one of my clusters, and the results were... not very optimistic. 😅 I mean, the tool worked fine, but I noticed that the services in the cluster did not have proper configurations. Let's examine a few of them.

The first report shows an overall grade, which seems fine: I got a B, which is a fairly good result. The cluster was evaluated against 980 checks, most of which passed.

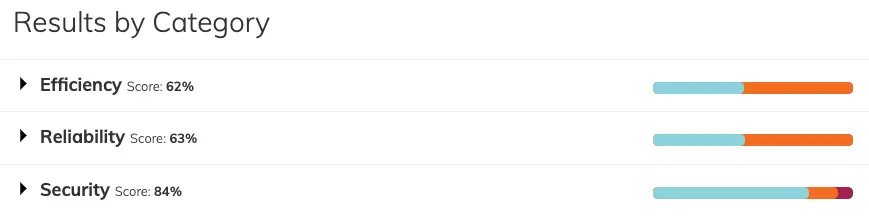

Scrolling down, we see three general categories:

- Efficiency: mostly CPU and memory settings, i.e. whether requests and limits are set

- Reliability: mostly the availability of workloads

- Security: mostly RBAC setup, vulnerabilities, and privilege escalation

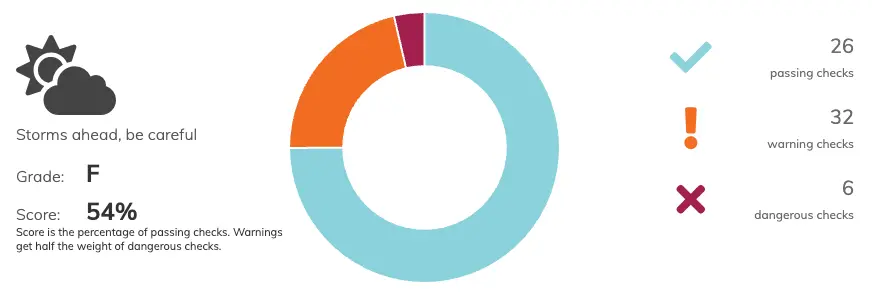

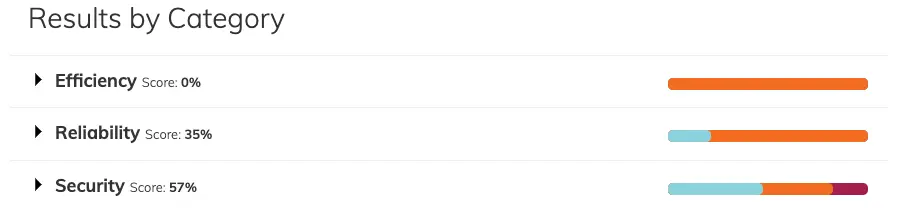

These results show that the cluster is reasonably secure, but there are gaps in efficiency and reliability. The picture changes when we filter by namespace and select the one that contains our backend microservices.

Its F grade is not something I could be proud of, and the category scores are just as pessimistic.

So, what could I do in that case? Polaris gives us hints on how to improve the grade: each failing check comes with a warning that describes the problem, which guides us on what to fix.

Polaris also lets us add custom checks, which we can supply through an additional config map. As an example, the check below flags container images pulled from quay.io.

```yaml
checks:
  imageRegistry: warning

customChecks:
  imageRegistry:
    successMessage: Image comes from allowed registries
    failureMessage: Image should not be from disallowed registry
    category: Security
    target: Container
    schema:
      '$schema': http://json-schema.org/draft-07/schema
      type: object
      properties:
        image:
          type: string
          not:
            pattern: ^quay.io
```

As we see, a tool like this is a good way to start our adventure with Policy-as-Code. The next tool is more advanced, but it offers many more configuration options and covers more than just Kubernetes.

Open Policy Agent (OPA)

The Open Policy Agent (OPA) is an open-source, general-purpose policy engine that unifies policy enforcement across the stack. It provides a high-level declarative language called Rego to specify policy as code and simple APIs to offload policy decision-making from your software. OPA allows you to enforce policies in various contexts such as microservices, Kubernetes, CI/CD pipelines, API gateways, and more. It was originally created by Styra and is now a graduated project in the Cloud Native Computing Foundation (CNCF) landscape.

The main features of this tool are:

- Policy decoupling: OPA decouples policy decision-making from policy enforcement. When your software needs to make policy decisions, it queries OPA and supplies structured data (e.g., JSON) as input. OPA accepts arbitrary structured data as input and generates policy decisions by evaluating the query input against policies and data. Your policies can generate arbitrary structured data as output.

- Domain-agnostic policies: OPA and Rego are domain-agnostic, allowing you to describe almost any kind of invariant in your policies. For example, you can define policies for access control, network traffic rules, workload placement, binary download restrictions, container capabilities, system access times, and more.

- Flexible integration: OPA provides simple APIs that allow you to integrate it with various systems and services. You can use OPA’s APIs to offload policy decision-making from your software and enforce policies at different layers of your stack.
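To illustrate the decoupling described above, here is a minimal Python sketch of an application asking OPA for a decision through its REST Data API (`POST /v1/data/<package path>/<rule>` with an `{"input": ...}` body). It assumes an OPA server listening on `localhost:8181`, OPA's default port, with a policy loaded under the `kubernetes.admission` package. Only the standard library is used, and the helper names are our own.

```python
import json
import urllib.request

OPA_URL = "http://localhost:8181"  # assumption: a local OPA server on its default port


def build_opa_query(policy_path: str, input_doc: dict, base_url: str = OPA_URL) -> urllib.request.Request:
    """Build a POST /v1/data/<path> request carrying the input document."""
    body = json.dumps({"input": input_doc}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/data/{policy_path.strip('/')}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query_opa(policy_path: str, input_doc: dict, base_url: str = OPA_URL):
    """Send the query and return the 'result' field (None if the rule is undefined)."""
    request = build_opa_query(policy_path, input_doc, base_url)
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.load(response).get("result")


if __name__ == "__main__":
    # Requires a running OPA server; the input mimics a Kubernetes AdmissionReview
    review = {"request": {"kind": {"kind": "Pod"}, "object": {"spec": {"containers": []}}}}
    print(query_opa("kubernetes/admission/deny", review))
```

The application only ships JSON in and reads JSON out; which rules apply lives entirely in OPA, so the policy can change without redeploying the service.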

Compared with Polaris, OPA has a different focus and different use cases:

- OPA is a general-purpose policy engine that can be used across different contexts such as microservices, Kubernetes, CI/CD pipelines, API gateways, and more

- Fairwinds Polaris is an open-source policy engine specifically designed for Kubernetes. It validates and remediates Kubernetes resources to ensure configuration best practices are followed

Both tools have their strengths and can be used together, depending on your specific requirements. If you work with Kubernetes and want to ensure configuration best practices are followed, Fairwinds Polaris might be a good fit. If you need a general-purpose policy engine that can be integrated into various systems and services, OPA is a suitable choice.

Example:

This policy checks whether the containers in a Kubernetes Pod set requests and limits for CPU and memory. If they are not set, the policy denies the Pod creation request and returns an error message.

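```rego
package kubernetes.admission

import rego.v1

# Every container must declare resources.<section>.<resource> for these pairs
required_resources := [
	["requests", "cpu"],
	["requests", "memory"],
	["limits", "cpu"],
	["limits", "memory"],
]

# One message per container and per missing field, so a single
# missing value is enough to reject the Pod
deny contains msg if {
	input.request.kind.kind == "Pod"
	some container in input.request.object.spec.containers
	some path in required_resources
	not container.resources[path[0]][path[1]]
	msg := sprintf("container %v has no resources.%v.%v set", [container.name, path[0], path[1]])
}
```

Summary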

In this comprehensive exploration of cloud governance, we traveled through the intricacies of Organization Policies offered by the "big three" cloud providers—AWS, Azure, and Google Cloud. We learned how these policies provide a sturdy foundation for centralized governance, cost optimization, security reinforcement, and compliance assurance.

Across these cloud platforms, Organization Policies have emerged as essential tools for streamlining cloud operations, maintaining control, and ensuring adherence to organizational standards and industry regulations. Whether it's enforcing resource tagging standards, optimizing cloud spend, or enhancing security measures, these policies pave the way for efficient and secure cloud management.

As you continue your cloud journey, remember that effective governance not only ensures operational efficiency and cost savings but also fortifies your cloud infrastructure against emerging threats and regulatory challenges. Embrace the power of policies and tools to navigate the cloud landscape with confidence, knowing that you have the tools and knowledge to maintain control and compliance.

Thank you for joining me on this journey through the intricacies of cloud governance. As the cloud landscape evolves, stay tuned for more insights and best practices to help you navigate the ever-changing world of cloud management.