Voice detection that works

Our first attempt measured audio volume to detect speech. This failed immediately: keyboard clicks triggered false positives, quiet speakers were missed entirely, and background noise made the results unreliable.
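To make the failure concrete, here is a minimal sketch of a volume-based detector. It assumes the check was an RMS energy threshold; the threshold value and the synthetic signals are illustrative, not taken from our system.

```python
import math

THRESHOLD = 0.1  # hypothetical RMS threshold on samples in the range [-1, 1]

def rms(samples):
    """Root-mean-square energy of a block of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def is_speech_by_volume(samples, threshold=THRESHOLD):
    """Naive detector: anything loud enough counts as speech."""
    return rms(samples) >= threshold

# A short, loud transient such as a key press (synthetic).
keyboard_click = [0.0] * 90 + [0.9] * 10

# A quiet 200 Hz tone standing in for a soft-spoken candidate (synthetic).
quiet_speaker = [0.05 * math.sin(2 * math.pi * 200 * n / 16000) for n in range(1600)]

click_detected = is_speech_by_volume(keyboard_click)   # loud click passes the check
speaker_detected = is_speech_by_volume(quiet_speaker)  # quiet voice does not
```

The click clears the threshold and the quiet voice does not, which is exactly backwards. No single threshold fixes both cases, because loudness says nothing about whether a sound is speech.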

We switched to Silero VAD, a machine learning model that detects speech patterns rather than volume. It runs in the browser and filters out non-speech sounds automatically.

We added two-phase processing that starts transcribing at 250ms and confirms at 600ms. This prevents the system from interrupting candidates mid-thought while keeping response times fast enough to feel natural.
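The two-phase idea can be sketched as a small state machine over voice-activity frames. This sketch assumes the 250 ms and 600 ms figures measure continuous silence after the candidate stops talking; the class name, event names, and frame format are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

START_MS = 250    # phase 1: begin transcribing speculatively
CONFIRM_MS = 600  # phase 2: treat the answer as finished

@dataclass
class TwoPhaseEndpointer:
    silence_ms: int = 0
    heard_speech: bool = False
    started: bool = False

    def feed(self, is_speech: bool, frame_ms: int) -> Optional[str]:
        """Consume one voice-activity frame and return an event name, if any."""
        if is_speech:
            # The candidate resumed talking: discard any speculative work.
            event = "cancel" if self.started else None
            self.silence_ms = 0
            self.heard_speech = True
            self.started = False
            return event
        if not self.heard_speech:
            return None  # leading silence, nothing to transcribe yet
        self.silence_ms += frame_ms
        if self.silence_ms >= CONFIRM_MS:
            # Long enough pause: reset for the next answer and confirm.
            self.silence_ms = 0
            self.heard_speech = False
            self.started = False
            return "confirm"
        if not self.started and self.silence_ms >= START_MS:
            self.started = True
            return "start"
        return None

def run(frames, frame_ms=50):
    """Feed (is_speech) flags through the endpointer and collect events."""
    ep = TwoPhaseEndpointer()
    events = (ep.feed(flag, frame_ms) for flag in frames)
    return [e for e in events if e is not None]
```

With 50 ms frames, `run([True] * 4 + [False] * 12)` yields a `start` followed by a `confirm`. If speech resumes between the two thresholds, the sketch emits `cancel` instead, so a short pause mid-thought never ends the turn.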

Stopping hallucinations

Whisper invents phrases like "Thanks for watching!" and "Subscribe to my channel" when you send it silence or background noise.

We built four-layer prevention:

- Only transcribe actual speech patterns

- Track when the avatar speaks to prevent echo

- Require minimum duration and data size

- Clean audio before transcription

This approach reduced false transcriptions by 99%.
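A minimal sketch of how the four layers could be chained into a single gate in front of the transcriber. The thresholds, field names, and the cleaning step (DC offset removal plus peak normalization) are assumptions chosen for illustration; the real checks may differ.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

MIN_DURATION_MS = 300  # hypothetical minimum segment length
MIN_BYTES = 4000       # hypothetical minimum payload size

@dataclass
class Segment:
    samples: List[float]
    start_ms: int
    end_ms: int
    size_bytes: int
    vad_is_speech: bool  # verdict from the speech detector

def overlaps(seg: Segment, windows: List[Tuple[int, int]]) -> bool:
    """True if the segment intersects any (start_ms, end_ms) avatar window."""
    return any(seg.start_ms < w_end and w_start < seg.end_ms
               for w_start, w_end in windows)

def clean(samples: List[float]) -> List[float]:
    """Remove DC offset, then scale so the loudest sample has magnitude 1."""
    if not samples:
        return []
    offset = sum(samples) / len(samples)
    centered = [s - offset for s in samples]
    peak = max(abs(s) for s in centered)
    return centered if peak == 0 else [s / peak for s in centered]

def prepare(seg: Segment, avatar_windows: List[Tuple[int, int]]) -> Optional[List[float]]:
    """Return cleaned audio to transcribe, or None if any layer rejects it."""
    if not seg.vad_is_speech:                      # layer 1: speech only
        return None
    if overlaps(seg, avatar_windows):              # layer 2: avatar echo
        return None
    if (seg.end_ms - seg.start_ms < MIN_DURATION_MS
            or seg.size_bytes < MIN_BYTES):        # layer 3: too short or small
        return None
    return clean(seg.samples)                      # layer 4: clean audio
```

Ordering the cheap rejections first means most non-speech audio never reaches the cleaning step, let alone Whisper.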

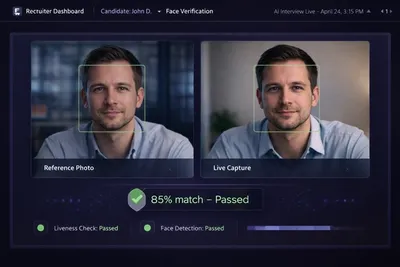

Identity verification

Candidates upload a photo with their application. During the interview, the system verifies that the person on camera matches that photo.

We built this using DeepFace and OpenCV so that everything is processed locally. There are no cloud APIs involved and no third-party data storage to manage, which supports GDPR compliance by design.

Anti-spoofing models detect printed photos, phone or tablet screens, video playback, and 3D masks. The system doesn't block real candidates, but it flags suspicious cases for human review.
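A rough sketch of the verify-then-flag flow, assuming the comparison uses `DeepFace.verify` with default settings. The function names, the outcome labels, and the `spoof_suspected` input (standing in for the anti-spoofing models' verdict) are hypothetical.

```python
from typing import Optional

def compare_faces(application_photo: str, interview_frame: str) -> Optional[bool]:
    """Compare two image files locally; None means no usable face was found."""
    # Imported lazily so the decision logic below can be used without DeepFace.
    from deepface import DeepFace
    try:
        result = DeepFace.verify(img1_path=application_photo,
                                 img2_path=interview_frame)
    except ValueError:
        # DeepFace raises ValueError when it cannot detect a face.
        return None
    return bool(result["verified"])

def decide(match: Optional[bool], spoof_suspected: bool) -> str:
    """Never reject automatically: anything doubtful goes to a human."""
    if match is None or not match or spoof_suspected:
        return "needs_human_review"
    return "verified"
```

Usage would look like `decide(compare_faces("application.jpg", "frame.jpg"), spoof_suspected=False)`, where both file names are placeholders. Note that there is no "rejected" outcome: a failed match or a suspected spoof only routes the case to a reviewer.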

Fair assessment

Four questions assess fluency, vocabulary, comprehension, and grammar. Answers are scored on a CEFR-based scale from Beginner to Native.

Three further questions cover experience and skills. Each one references the candidate's previous answers, and responses are scored on experience relevance, technical depth, and communication.

Questions are generated dynamically rather than drawn from templates, so every interview is unique while the scoring stays consistent.
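One way to keep scoring consistent while the questions vary is to fix the rubric and apply it to every interview. The sketch below does this for the four language dimensions. The 0 to 100 scale, the cut-offs, and the intermediate band labels are hypothetical; only the Beginner and Native endpoints come from the description above.

```python
from bisect import bisect_right
from statistics import mean

DIMENSIONS = ("fluency", "vocabulary", "comprehension", "grammar")

# Hypothetical bands and cut-offs on a 0-100 scale.
BANDS = ["Beginner", "A2", "B1", "B2", "C1", "Native"]
CUTOFFS = [20, 40, 60, 80, 95]

def language_band(scores: dict) -> str:
    """Average the four dimension scores and map the result to a band."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimension scores: {missing}")
    overall = mean(scores[d] for d in DIMENSIONS)
    # bisect_right counts how many cut-offs the overall score has reached.
    return BANDS[bisect_right(CUTOFFS, overall)]
```

Because the dimensions and cut-offs never change, two candidates who answer different questions are still placed on the same scale.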