How Is AI-Augmented Software Development Improving Delivery at 10Clouds?

20.11.2025 | 5 min read

TL;DR

We use AI coding agents alongside human engineers to ship software faster, with fewer bugs, and less risk. AI agents tackle specific tasks like planning, scaffolding, and writing tests. Human engineers review and refine everything. Automated checks catch issues before anything goes live.

Our early pilot numbers: ~30% faster delivery, ~25% fewer bugs making it to production, and 40% less time spent in code review.

What this means for you: ship features faster, hit your deadlines more consistently, and reduce the chance of things breaking in production.

Who this is for: CTOs, Engineering Leaders, and Product Managers looking to leverage AI in their development process without losing control or quality.

Why Use AI-Augmented Software Development in the First Place?

The challenge is that the demand for software is accelerating, complexity is growing, and yet human capacity remains finite.

We realised we could use AI-based coding agents to take on more routine, well-defined chunks of work so humans can focus on product decisions, architecture trade-offs, and system resilience.

But fully handing over development to generative AI is risky: it can introduce unseen dependencies, architecture pitfalls, code-quality problems, and security issues. So we built a hybrid model: an agent first pass, human-in-the-loop (HITL) gating, and automated guardrails.

In effect, AI writes the first draft and a human acts as editor-in-chief.

Research backs the approach. Atlassian’s HULA framework demonstrates that HITL agent workflows can reduce development time while retaining engineering control (Atlassian). Peer-reviewed research reinforces that human oversight ensures reliability, accountability, and quality in agentic systems (arXiv; DEV Community).

For clients this means faster delivery and maintained trust, because the human remains accountable and in control.

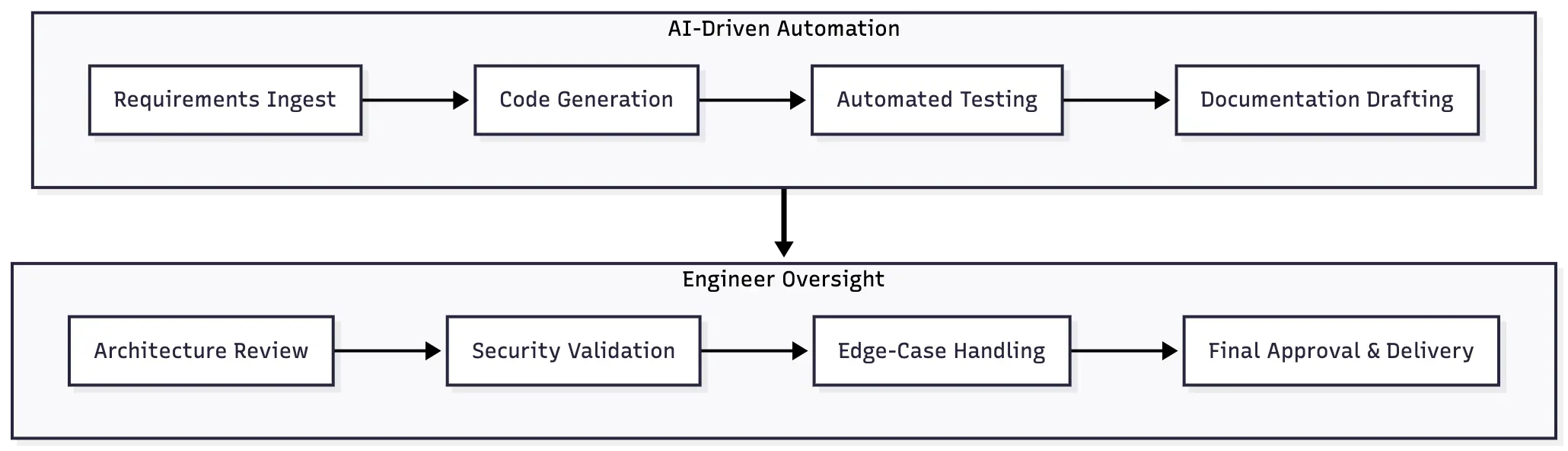

What Does Our AI-Augmented Development Workflow Actually Look Like?

Here’s how we run it, end to end:

- Intake & specification: A ticket or feature request enters our backlog (product owner + PM).

- Agent-planning: An agent analyses the spec, breaks it into tasks, and writes acceptance tests where applicable.

- Code-agent draft: The agent writes the scaffold and implements the initial code for the defined units.

- Test-agent pass: The agent writes or updates unit and integration tests and ensures code-path coverage where feasible.

- Human gate #1: A senior engineer reviews the task breakdown, architecture deltas, branch plan, and test strategy, then approves or requests revisions.

- CI & automated checks: On the merge branch, the pipeline runs static analysis, security scans, license checks, and the test suite.

- PR summary & human gate #2: The agent raises a pull request with an autogenerated summary, and a human reviewer gives final sign-off.

- Post-merge & rollout: Monitoring hooks track rollout, observability metrics, change-failure rates, MTTR.

- Continuous feedback loop: Agent performance and human reviewer comments feed into future agent improvements.

Human approval is mandatory at critical junctions.
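
As a rough illustration of what "mandatory" can mean in practice, the steps above can be modelled as an ordered list of stages in which a change cannot leave a human gate without a recorded sign-off. This is a minimal sketch, not our production tooling: the `Change` class, the stage identifiers, and the `approve`/`advance` methods are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field

# Stage names mirror the workflow steps listed above; the identifiers are illustrative.
STAGES = [
    "intake",
    "agent_planning",
    "code_agent_draft",
    "test_agent_pass",
    "human_gate_1",
    "ci_checks",
    "human_gate_2",
    "rollout",
]
HUMAN_GATES = {"human_gate_1", "human_gate_2"}


@dataclass
class Change:
    """A single ticket moving through the pipeline (hypothetical data model)."""

    ticket: str
    stage_index: int = 0
    approvals: dict = field(default_factory=dict)  # gate name -> reviewer

    @property
    def stage(self) -> str:
        return STAGES[self.stage_index]

    def approve(self, reviewer: str) -> None:
        """Record a human sign-off for the gate the change is currently at."""
        if self.stage not in HUMAN_GATES:
            raise ValueError(f"{self.stage} is not a human gate")
        self.approvals[self.stage] = reviewer

    def advance(self) -> str:
        """Move to the next stage; refuse to leave a gate without sign-off."""
        if self.stage in HUMAN_GATES and self.stage not in self.approvals:
            raise PermissionError(f"{self.stage} requires human approval")
        if self.stage_index < len(STAGES) - 1:
            self.stage_index += 1
        return self.stage
```

Recording the reviewer's name per gate also yields a simple audit trail: `change.approvals` shows who signed off at each junction.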

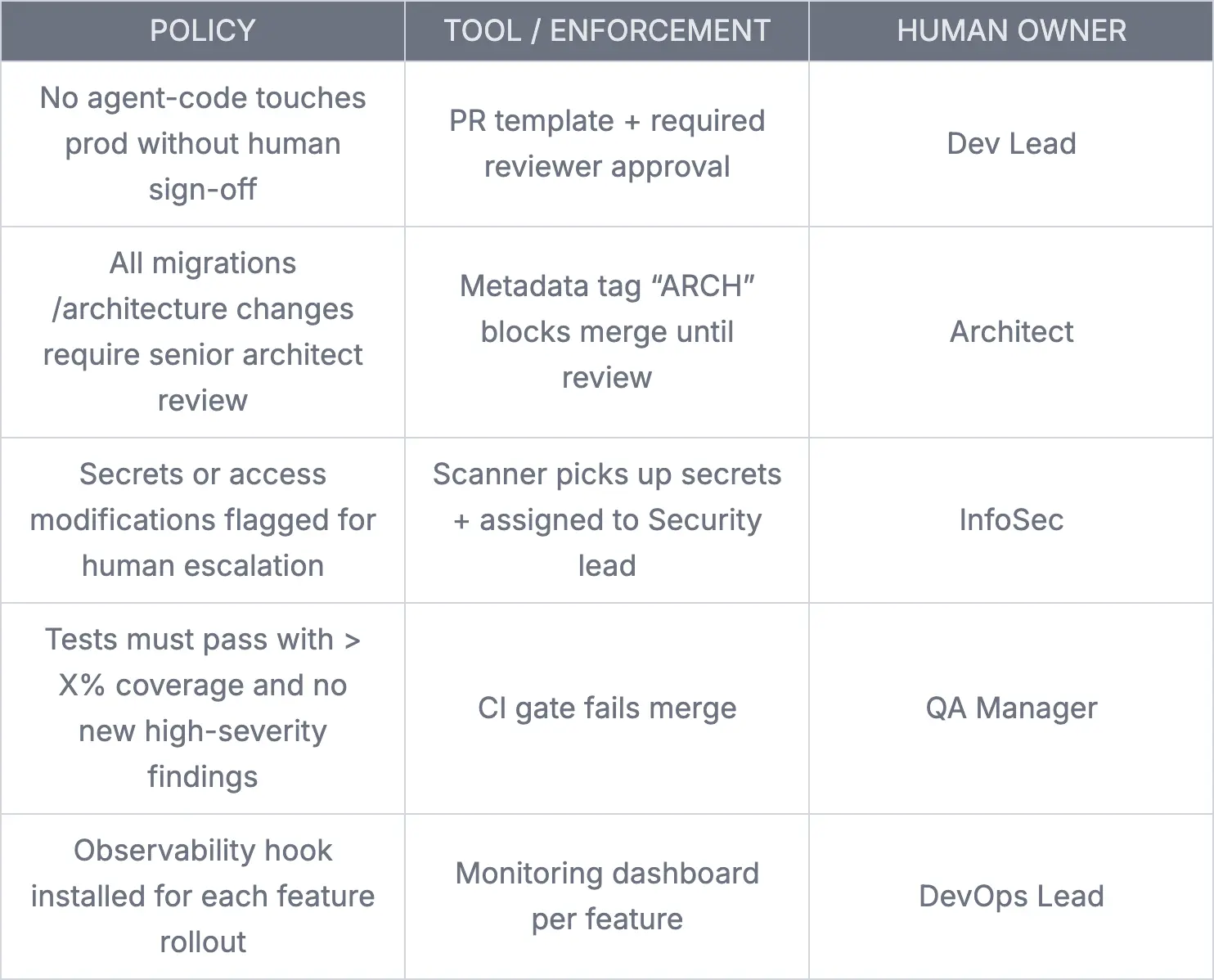

How Do We Ensure AI Never Ships Untrusted Code?

We don’t just hope oversight happens. We build frameworks, policies, and tooling so that it must happen. Here are our core guardrails:

By making these explicit, we avoid the “black box AI delivered code and we don’t know who owns it” scenario. Instead, a human is always in the loop, the audit trail is clear, and accountability rests with the engineer who approves the change.

What Evidence Shows AI-Augmented Software Engineering Works in the Real World?

When we introduced coding agents into our delivery process, we treated it like any engineering improvement: measure outcomes, validate assumptions, and listen to clients.

Here's what we've seen so far:

Real Client Results

“10Clouds has delivered functional features ahead of schedule and offers excellent support and consulting services. The team is willing to tackle project complexities, communicates effectively, and addresses issues transparently. Moreover, their AI expertise has boosted development efficiency.”

— Thomas Rubio, Founder & CEO at AI Agents Company

The takeaway: AI tools allowed closer collaboration, work optimization, faster throughput, and fewer surprises.

Industry Studies Confirm the Pattern

Independent engineering research supports the same benefits when AI agents work under human oversight:

• Human-in-the-loop agents reduce development effort and speed up planning and coding tasks

Source: Atlassian HULA study

• Human oversight is essential to maintain accountability and reliability in agentic workflows

Source: DEV Community

• Poor governance can increase code churn without improving quality

Source: arXiv (controlled studies on AI-coding agents)

We acknowledge those caveats, which is exactly why our approach includes strict human approval gates, automated testing policies, and security guardrails.

The takeaway: an AI-powered software development process needs to follow best practices, above all human-in-the-loop review and data privacy.

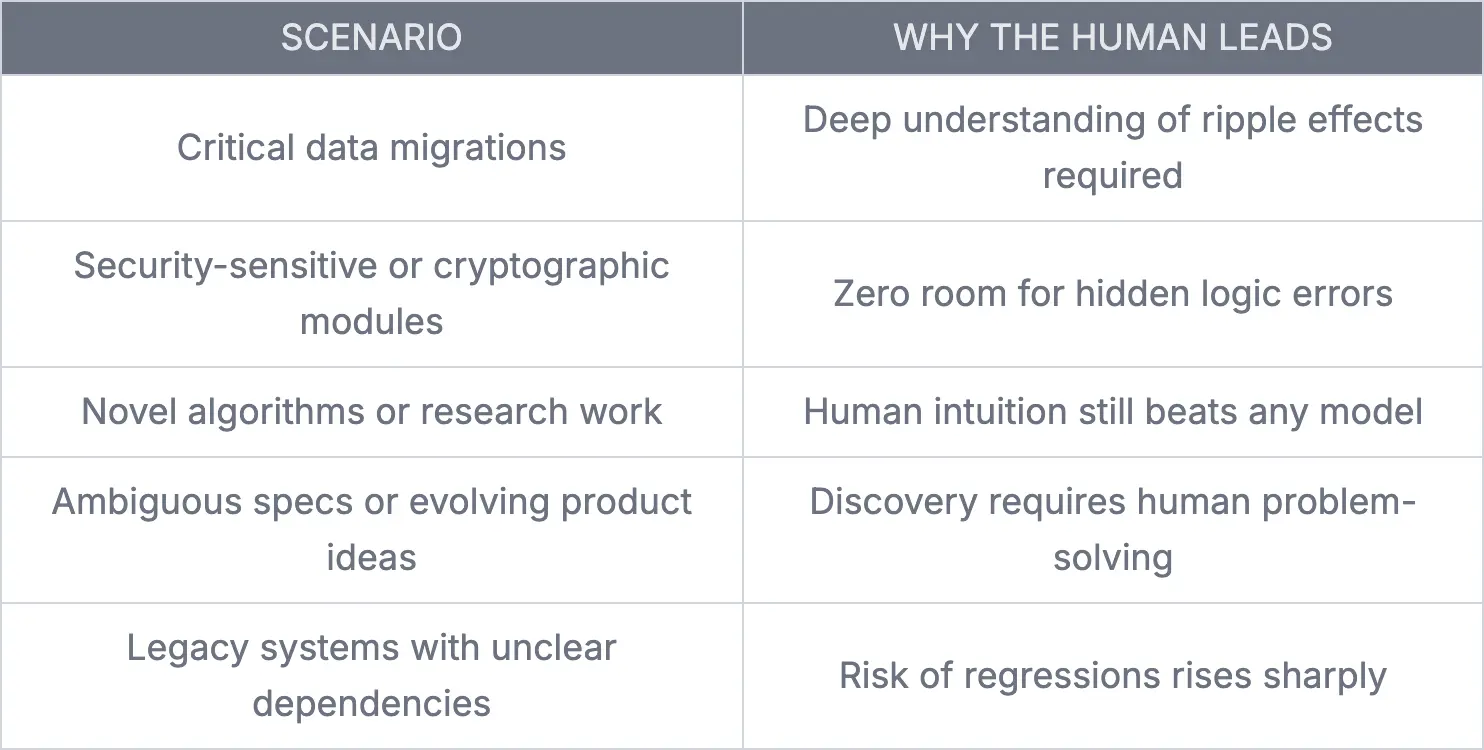

When Should Agentic AI Not Write Your Code?

There are times when speed is not the priority, and uncertainty is too high for automation to help. We are deliberate about where AI assists and where humans must lead.

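We avoid agent-generated code in critical migrations, cryptography, complex architecture changes, and ambiguous requirements that demand human judgment.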

AI is empowering, but not omniscient.

Our rule of thumb: If expertise, judgment, or creativity defines the task, a human owns it.

That’s how we preserve safety, accountability, and architectural integrity while still reaping the benefits of coding assistants.

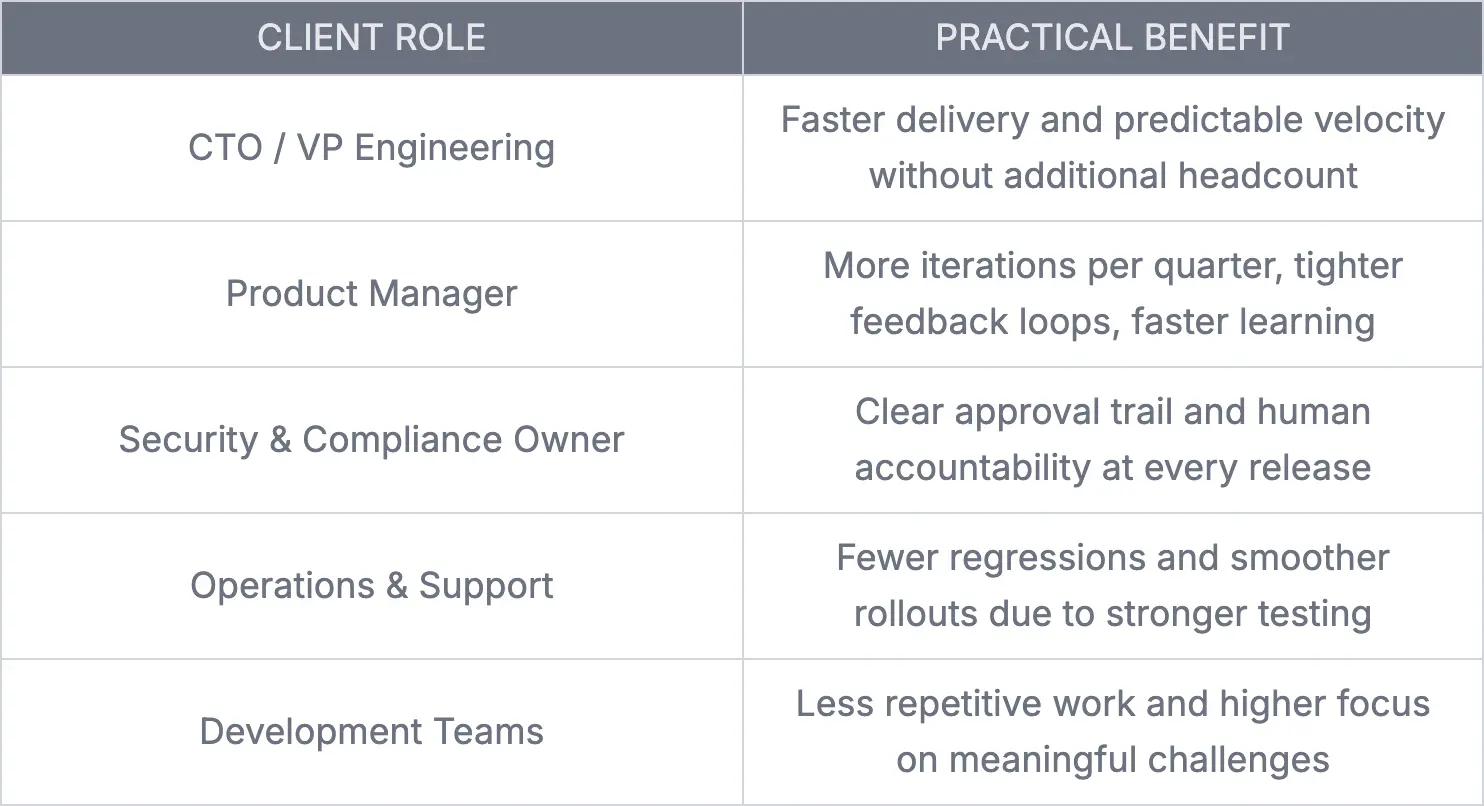

What Do Clients Actually Gain From AI-Powered Development?

Clients feel the impact long before they read any metrics. Our hybrid model changes the experience of working with a dev team.

They gain:

The outcome is always the same: Better software and streamlined deployment with transparency and control.

Final Word: 10Clouds as an AI-Augmented Development Company With Humans at the Helm

AI isn't replacing our developers at 10Clouds, but it's transforming code generation and making them better at what they do. When we combine smart automation with human expertise, we deliver faster, build stronger products, and keep our team focused on what matters most. And our clients notice the difference.

If you want to see what AI-augmented development can do for your project, without giving up control, let's talk.

FAQ

Will AI agents produce low-quality or unreliable code?

Our AI-augmented software development workflow assigns agents only clear, well-defined coding tasks. All code goes through automated tests and human review before merge, ensuring quality and security stay high.

Who is responsible if something goes wrong?

Accountability always remains with the human software developer who approves the change. Every decision in the development lifecycle is traceable via PR history and audit logs.

How are credentials, secrets, or PII handled?

We automate scanning for sensitive data, but humans must approve any branch that modifies access. Artificial intelligence never pushes changes to security-critical areas without oversight.

What happens when an agent gets stuck or faces ambiguity?

Agentic AI only automates repetitive tasks. When confidence drops or a spec is unclear, the workflow escalates immediately to a human developer for intervention.
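
A minimal sketch of such an escalation rule follows. It assumes the agent reports a confidence score between 0 and 1 and a list of unresolved questions about the spec. The `AgentResult` type, the `route` function, and the 0.8 threshold are hypothetical and shown only to make the idea concrete.

```python
from dataclasses import dataclass

# Hypothetical cut-off; a real value would be tuned per project.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class AgentResult:
    task: str
    confidence: float          # assumed self-reported score, 0.0 to 1.0
    open_questions: list[str]  # ambiguities the agent found in the spec


def route(result: AgentResult) -> str:
    """Decide whether the agent may continue or a human must step in."""
    if result.open_questions:
        # An unclear spec always goes to a human, regardless of confidence.
        return "escalate_to_human"
    if result.confidence < CONFIDENCE_THRESHOLD:
        return "escalate_to_human"
    return "continue"
```

Checking open questions before the score means a confident agent still cannot proceed on an ambiguous spec.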

What kinds of tasks do you not use agents for?

We avoid AI-generated code for critical migrations, cryptography, complex architecture changes, and ambiguous requirements that demand human judgment.

Will this replace engineers?

No. AI-augmented development supports developers by removing repetitive work. Humans own architecture, business logic, and high-value software engineering decisions.

How do you measure success?

We track lead time, cycle time, escaped defects, and developer productivity. Monthly updates ensure our AI-driven improvements deliver better software faster.
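
To make these metrics concrete, here is a small sketch of how they could be computed from ticket timestamps. It assumes lead time runs from ticket creation to deployment and cycle time from the start of work to deployment; definitions vary between teams. The field names (`created`, `started`, `deployed`, `escaped_defects`) and the `summarize` function are hypothetical.

```python
from datetime import datetime
from statistics import median


def hours_between(start: datetime, end: datetime) -> float:
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600


def summarize(tickets: list[dict]) -> dict:
    """Aggregate delivery metrics over a list of completed tickets."""
    # Lead time: ticket created -> deployed (assumed definition).
    lead = [hours_between(t["created"], t["deployed"]) for t in tickets]
    # Cycle time: work started -> deployed (assumed definition).
    cycle = [hours_between(t["started"], t["deployed"]) for t in tickets]
    return {
        "median_lead_time_h": median(lead),
        "median_cycle_time_h": median(cycle),
        # Escaped defects: bugs found in production after release.
        "escaped_defects": sum(t["escaped_defects"] for t in tickets),
    }
```

Medians are used here rather than means so that one unusually slow ticket does not dominate the monthly figure.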

What tools are involved?

We use standard development tools like GitHub or GitLab, CI pipelines with static and security scans, and internal orchestration for agentic workflows, plus observability for production validation.

How do you ensure the output stays aligned to business logic?

Agents generate code from defined acceptance tests and user stories. Human reviewers validate that functionality supports product outcomes before anything ships.

Can this apply to legacy or traditional software development?

Yes, after a readiness check that covers modularity, test coverage, and dependency clarity. If the foundation is weak, we phase AI integration gradually to protect quality and security.